CSCI 136

Fundamentals of Computer Science II

Spring 2014

|

CSCI 136 |

|

In this assignment, you will be building a predictive keyboard.

The keyboard learns to make predictions by text given at startup and by things the user types into the program.

As users type, the program predicts the most likely word given the currently entered word prefix.

So for example, if the user has typed th, the keyboard will probably predict the.

You will learn how to use the Java HashMap collection which is crucial to making the keyboard's learning and predictions fast and efficient. |

There are 9 total words in our text. Ignoring case and punctuation, the word the appears twice. The unigram probability of the is thus P(the) = 2/9. The unigram probability of cat is P(cat) = 1/9. The other six words: is, in, corner, of, their, garage all also have a probability of 1/9. If a word has never been seen, its probability is zero: P(zebra) = 0/9 = 0.0.The cat is in the corner of their garage.

public class DictEntry

------------------------------------------------------------------------------------------------------------------------------

DictEntry(String word, double prob) // create a new entry given a word and probability

DictEntry(String word, double prob, String filename) // create a new entry given a word, probability, and audio filename

String getAudio() // getter for audio filename

String getWord() // getter for the word

double getProb() // getter for the probability

boolean matchPattern(String pattern) // does this word match the given pattern?

public class WordPredictor

-----------------------------------------------------------------------------------------

void train(String trainingFile) // train the unigram model on all the words in the given file

void trainWord(String word) // train on a single word, convert to lowercase, strip anything not a-z or '

long getTrainingCount() // get the number of total words we've trained on

int getWordCount(String word) // get the number of times we've seen this word (0 if never)

void build() // recompute the word probabilities and prefix mapping

DictEntry getBest(String prefix) // return the most likely DictEntry object given a word prefix

The wordToCount instance variable is a map where the keys are the unique words encountered in the training data. The values are the integer count of how many times we've seen each word in the data. Only words seen in the training data will have an entry in the HashMap. The total instance variable tracks how many words of training data we have seen. That is, total should equal the sum of all integer counts stored in your map. Training is cumulative for repeated calls to train() and trainWord(), so you just keep increasing the counts stored in your wordToCount map and your total count of training words.private HashMap<String, Integer> wordToCount; private long total;

A Map collection in Java can map multiple keys to the same value. This is exactly what we need in this case since a set of strings (such as wh, wha, whal and whale) may all need to map to a single DictEntry object. You need to ensure that each prefix maps to its most likely word. So even if the word thespian was encountered first in the training data, for any sensible training text, th should probably map to the and not thespian.private HashMap<String, DictEntry> prefixToEntry;

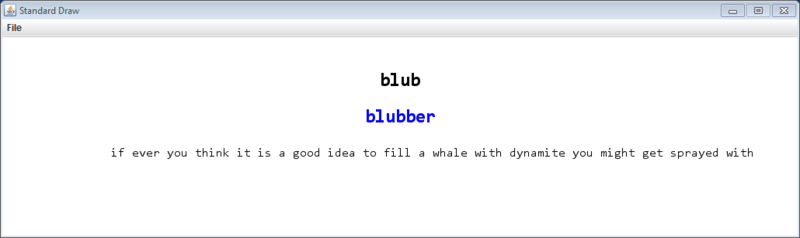

Predictive keyboard program. Product marketing has mocked up a screenshot for your new predictive keyboard product:% java WordPredictor bad1 = null training words = 202 bad2 = null Could not open training file: thisfiledoesnotexist.txt training words = 202 count, the = 10 count, me = 5 count, zebra = 0 count, ishmael = 1 count, savage = 0 count, the = 32 count, me = 5 count, zebra = 0 count, ishmael = 1 count, savage = 1 a -> and 0.03917050691244239 ab -> about 0.004608294930875576 b -> bird 0.004608294930875576 be -> before 0.002304147465437788 t -> the 0.07373271889400922 th -> the 0.07373271889400922 archang -> archangelic 0.002304147465437788 training words = 434 before, b -> bird 0.004608294930875576 before, pn -> null after, b -> bird 0.004576659038901602 after, pn -> pneumonoultramicroscopicsilicovolcanoconiosis 0.002288329519450801 training words = 437 a -> and 0.030079417288752873 ab -> about 0.001472985727769356 b -> but 0.008580499385064212 be -> be 0.0048908846494866 t -> the 0.06757143265738066 th -> the 0.06757143265738066 archang -> archangel 3.3368608719694154E-5 training words = 209778 elapsed (s) : 0.459 heap memory used (KB) : 30245 Random load test: elapsed (s) : 3.447 heap memory used (KB) : 86955 Hit % = 30.0537

| In particular, note the last requirement. This requirement means you can in fact start your keyboard with no data and it will learn words as you go. You can also train the model on some data by providing file(s) on the command line and your keyboard will still learn new words and update its model based on what it sees you type. How cool is that? This program should sell like hot cakes! The video to the right shows the keyboard starting without any training data. Notice how it eventually starts making predictions based on what it has seen the user type. |

Do I need to follow the prescribed APIs? Yes. You may not add public methods to the API; however, you may add private methods (which are only accessible in the class in which they are declared). For example, in our PredictiveKeyboard class, we made a private helper method private String normalize(String text). This method's converts a word of training data and make it lower case and strip any invalid characters. This helped simplify our trainWord() method.

I seem to have repeated code in my train() and trainWord() methods. Is that okay? No, recall our mantra: Repeated Code is Evil™. You should probably refactor your code so the train() method makes use of the trainWord() method.

How do I test if a HashMap contains a certain key value? You can use the containsKey() method to check whether a particular key exists. For further details, check out the HashMap documentation.

How do I test a char variable to see if it is the backspace key? The backspace key is \b.

How do I test a char variable to see if it is the return key? The return key is \n.

How do I represent the char value for a single apostrophe? Yeah that tripped us up at first too. You need to escape the apostrophe with a backslash in the char literal since apostrophes are used to start and end the literal. So for example this code (ch == '\'') tests if the char variable ch is equal to an apostrophe.

Why did you use a long type for the total count of training words but an Integer for the HashMap value? Well we used a long since we were worried somebody might train our keyboard on over 2^31 - 1 (a little more than 2 billion) words of data. We used an Integer instead of a Long in the HashMap since even with a huge amount of training data, it is unlikely we'd see any particular word over 2 billion times. This saved us memory in our HashMap instance variable.

Is there a way to clear out all the data in a HashMap so I can re-fill it based on new training data? Yes. You can call the clear() method on the HashMap object. You could also reinstantiate the object and let the Java garbage collector deal with the old map. Probably the earlier is somewhat more efficient.

Extra credit.

|

Page last updated: December 30, 2014